Exploring Edge AI: The Future of Artificial Intelligence

In recent years, the field of artificial intelligence (AI) has seen rapid advancements, with Edge AI emerging as one of the most promising technologies. As the demand for real-time data processing and decision-making grows, Edge AI offers a solution by bringing intelligence closer to the source of data generation.

What is Edge AI?

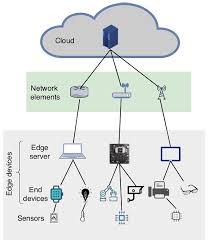

Edge AI refers to the deployment of artificial intelligence algorithms locally on devices at the edge of a network, rather than relying on a centralised cloud infrastructure. This approach enables data processing to occur directly on devices such as smartphones, IoT sensors, and autonomous vehicles. By processing data locally, Edge AI reduces latency and bandwidth usage while enhancing privacy and security.

Benefits of Edge AI

- Reduced Latency: By processing data locally, Edge AI significantly reduces the time it takes to analyse information and make decisions. This is crucial for applications that require immediate responses, such as autonomous driving or industrial automation.

- Enhanced Privacy: With data processed on-device rather than being sent to a central server, there are fewer opportunities for sensitive information to be intercepted or compromised.

- Lower Bandwidth Usage: Since far less raw data needs to be transmitted back and forth between devices and cloud servers, network congestion is reduced, leading to more efficient use of bandwidth.

- Improved Reliability: Devices equipped with Edge AI can continue functioning independently even if they lose connection to the internet or experience network issues.

Applications of Edge AI

The potential applications for Edge AI are vast and varied across numerous industries:

- Healthcare: Wearable devices can monitor vital signs in real-time and alert medical professionals in case of anomalies without needing constant connectivity.

- Agriculture: Smart sensors can analyse soil conditions and crop health directly in the field, enabling farmers to make informed decisions about irrigation and fertilisation.

- Retail: Intelligent cameras can track foot traffic patterns in stores and adjust digital signage accordingly without sending video feeds over the internet.

- Smart Cities: Traffic management systems can optimise flow by analysing real-time vehicle movement data at intersections without relying on cloud computing resources.

The Future of Edge AI

The development of more powerful processors specifically designed for edge computing is driving further innovation in this field. As these technologies become more accessible and affordable, it is expected that an increasing number of industries will adopt Edge AI solutions. Additionally, advancements in machine learning algorithms tailored for low-power devices will enable even more sophisticated applications at the edge.

The future holds exciting possibilities as we continue exploring how best to leverage this transformative technology across various sectors worldwide. Businesses are seeking ways not only to improve efficiency but also to enhance customer experiences through personalised services delivered instantaneously wherever they are needed most. Because Edge AI can operate independently of traditional cloud-based systems, there is little doubt about the important role it will play in shaping tomorrow's intelligent landscape.

Seven Essential Tips for Enhancing Edge AI Performance and Security

- 1. Consider the hardware constraints when designing edge AI solutions.

- 2. Optimize your models for efficiency to run smoothly on edge devices.

- 3. Use techniques like quantization and pruning to reduce model size for better performance on edge devices.

- 4. Implement local data processing to minimize latency in edge AI applications.

- 5. Ensure data security and privacy measures are in place for sensitive information processed at the edge.

- 6. Regularly update and maintain your edge AI systems to keep them secure and efficient.

- 7. Test your edge AI applications thoroughly across different scenarios to ensure reliability.

1. Consider the hardware constraints when designing edge AI solutions.

When designing edge AI solutions, it is crucial to consider the hardware constraints inherent in edge devices. Unlike traditional cloud-based systems with virtually unlimited computational power and storage, edge devices often have limited processing capabilities, memory, and energy resources. This necessitates optimising AI models to run efficiently within these constraints, ensuring they can perform real-time data processing without draining battery life or requiring excessive computational power. By tailoring algorithms to fit the specific hardware specifications of the device, developers can achieve a balance between performance and efficiency, allowing for seamless integration of AI functionalities at the edge while maintaining device longevity and reliability.
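As a rough illustration of checking a model against a device's limits, the sketch below estimates the storage needed for a model's weights at different numeric precisions and compares it with a memory budget. The function names, the 25% overhead allowance, and the example figures are all assumptions made for illustration; real memory use depends on the model architecture and the inference runtime.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Approximate storage for the weights alone, rounded up to whole bytes."""
    return (num_params * bits_per_param + 7) // 8


def fits_on_device(num_params: int, bits_per_param: int,
                   ram_budget_bytes: int, overhead_fraction: float = 0.25) -> bool:
    """Return True if weights plus an assumed runtime overhead fit in the budget.

    overhead_fraction is a placeholder for activations and runtime buffers;
    the real figure depends on the model and the inference runtime.
    """
    weights = model_size_bytes(num_params, bits_per_param)
    required = weights * (1 + overhead_fraction)
    return required <= ram_budget_bytes


if __name__ == "__main__":
    params = 1_000_000          # hypothetical 1M-parameter model
    budget = 2 * 1024 * 1024    # hypothetical 2 MiB memory budget
    for bits in (32, 16, 8):
        size = model_size_bytes(params, bits)
        ok = fits_on_device(params, bits, budget)
        print(f"{bits}-bit weights: {size} bytes, fits: {ok}")
```

Under these assumed numbers, only the 8-bit variant fits within the budget, which is the kind of early check that can guide model choices before any training or conversion work begins.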

2. Optimize your models for efficiency to run smoothly on edge devices.

Optimising models for efficiency is crucial when deploying AI on edge devices, as these devices often have limited computational resources and power constraints. By streamlining algorithms and reducing the complexity of models, developers can ensure that AI applications run smoothly and responsively on edge hardware. Techniques such as model pruning, quantisation, and knowledge distillation can be employed to achieve this optimisation. These methods help in minimising the model size and computational load without significantly compromising accuracy. As a result, optimised models not only enhance performance but also extend the battery life of edge devices, making them more practical for real-world applications where connectivity to powerful cloud servers may be limited or unavailable.

3. Use techniques like quantization and pruning to reduce model size for better performance on edge devices.

When deploying AI models on edge devices, optimising for performance and efficiency is crucial due to the limited computational resources available. Techniques such as quantization and pruning play a vital role in achieving this optimisation. Quantization involves reducing the precision of the numbers used in a model, which leads to smaller model sizes and faster computation without significantly sacrificing accuracy. Pruning, on the other hand, eliminates redundant or less important connections within a neural network, thereby reducing its complexity and size. By employing these techniques, developers can ensure that AI models run more efficiently on edge devices, leading to improved performance and responsiveness in real-time applications. These optimisations are essential for enabling sophisticated AI functionalities in environments where processing power and memory are constrained.
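The two techniques can be sketched in a few lines of plain Python. This is a minimal illustration, assuming symmetric 8-bit quantization with a single scale per weight list and magnitude-based pruning; production toolchains apply the same ideas per layer or per channel, usually with calibration data and fine-tuning. All function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the quantized integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Set the smallest-magnitude fraction of weights to zero."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.01]
    q, scale = quantize_int8(weights)
    print("quantized:", q)
    print("restored: ", dequantize(q, scale))
    print("pruned:   ", prune_by_magnitude(weights, 0.5))
```

Each quantized weight takes one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Pruning leaves zeros that a sparse storage format or a sparsity-aware runtime can then skip.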

4. Implement local data processing to minimize latency in edge AI applications.

Implementing local data processing is a crucial strategy in minimising latency for edge AI applications. By processing data on the device itself, rather than relying on a centralised cloud server, responses can be generated almost instantaneously. This is particularly important for applications where real-time decision-making is essential, such as autonomous vehicles or industrial automation systems. Local data processing not only reduces the time taken to transmit and receive data but also decreases the dependency on network connectivity, ensuring that devices can function effectively even in environments with limited or unreliable internet access. Additionally, this approach enhances privacy and security by keeping sensitive information on the device, reducing the risk of exposure during transmission. Overall, local data processing is a key factor in improving the efficiency and reliability of edge AI solutions.
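To make the idea concrete, the following sketch shows a device that evaluates every sensor reading locally and only flags the rare readings worth acting on or reporting. It uses a simple rolling z-score check as a stand-in for an on-device model; the class name, window size, and threshold are illustrative assumptions rather than recommended values.

```python
from collections import deque
from statistics import mean, pstdev


class LocalAnomalyDetector:
    """Flags readings that deviate strongly from the recent local history."""

    def __init__(self, window=20, z_threshold=3.0, min_history=5):
        self.readings = deque(maxlen=window)  # only recent values are kept
        self.z_threshold = z_threshold
        self.min_history = min_history

    def process(self, value):
        """Return True if the reading looks anomalous; no network call is made."""
        is_anomaly = False
        if len(self.readings) >= self.min_history:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        self.readings.append(value)
        return is_anomaly


if __name__ == "__main__":
    detector = LocalAnomalyDetector()
    heart_rates = [70, 71, 69, 70, 72, 71, 70, 140]  # made-up sample data
    alerts = [hr for hr in heart_rates if detector.process(hr)]
    print("readings processed locally:", len(heart_rates))
    print("alerts worth transmitting:", alerts)
```

In this made-up run, eight readings are analysed on the device and only one would need to leave it, which is where the latency and bandwidth savings come from.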

5. Ensure data security and privacy measures are in place for sensitive information processed at the edge.

Ensuring data security and privacy measures are in place for sensitive information processed at the edge is paramount in the implementation of Edge AI solutions. As data is processed locally on devices, there is a reduced need to transmit information over potentially vulnerable networks, which inherently enhances privacy. However, this also necessitates robust security protocols to protect data on the device itself. Encryption, secure boot processes, and regular software updates are essential steps to safeguard sensitive information from unauthorised access or cyber threats. Additionally, adopting privacy-by-design principles ensures that data protection is integrated into the architecture of Edge AI systems from the outset. By prioritising these measures, organisations can build trust with users and comply with regulatory requirements while fully leveraging the benefits of Edge AI technology.

6. Regularly update and maintain your edge AI systems to keep them secure and efficient.

Regularly updating and maintaining your edge AI systems is crucial to ensuring their security and efficiency. As technology evolves, new vulnerabilities can emerge, making systems susceptible to cyber threats. By keeping software and firmware up to date, potential security gaps can be closed, protecting sensitive data processed at the edge. Additionally, regular maintenance ensures that the system operates at optimal performance levels, incorporating the latest improvements in AI algorithms and processing capabilities. This proactive approach not only enhances the reliability of edge AI solutions but also extends their lifespan, providing a more robust return on investment for businesses relying on these advanced technologies.
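One part of a safe update process is checking that an update package is authentic before installing it. The sketch below illustrates that check with a shared-secret HMAC from Python's standard library. This is a simplified stand-in: real deployments typically rely on asymmetric signatures and hardware-backed key storage, and the function names, key, and payload here are hypothetical.

```python
import hashlib
import hmac


def sign_update(payload: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag for an update package (done by the publisher)."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_update(payload: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the tag on the device before the update is applied."""
    actual_tag = hmac.new(key, payload, hashlib.sha256).hexdigest()
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(actual_tag, expected_tag)


if __name__ == "__main__":
    key = b"example-shared-secret"          # placeholder; never hard-code real keys
    firmware = b"model-v2 weights and code"  # placeholder payload
    tag = sign_update(firmware, key)

    print("genuine update accepted:", verify_update(firmware, key, tag))
    print("tampered update accepted:", verify_update(firmware + b"!", key, tag))
```

A device following this pattern would refuse any package whose tag does not match, so a corrupted or modified update is rejected before it can replace working software.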

7. Test your edge AI applications thoroughly across different scenarios to ensure reliability.

Thorough testing of edge AI applications across diverse scenarios is crucial to ensure their reliability and robustness in real-world environments. Unlike traditional cloud-based systems, edge AI operates on devices with varying capabilities and conditions, such as smartphones, sensors, and autonomous vehicles. These devices may encounter different network conditions, power constraints, and environmental factors that could affect performance. By simulating a wide range of scenarios during testing, developers can identify potential weaknesses and optimise the application to handle unexpected situations effectively. This rigorous testing process helps guarantee that the edge AI solutions are dependable, delivering consistent performance regardless of the challenges they face in dynamic settings.
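Scenario coverage of this kind can be organised as a simple matrix of conditions. The sketch below enumerates combinations of network state and battery level and checks that a device policy behaves sensibly in each one. The policy function, its mode names, and the 15% battery cutoff are invented for illustration and do not come from any particular framework.

```python
from itertools import product


def choose_mode(network_up: bool, battery_pct: int) -> str:
    """Hypothetical device policy: pick an operating mode for the conditions."""
    if battery_pct < 15:
        return "low_power_local"   # conserve energy regardless of network
    if network_up:
        return "local_with_sync"   # infer locally, sync results when possible
    return "local_only"            # keep working without connectivity


def run_scenarios():
    """Evaluate the policy across every combination of test conditions."""
    network_states = [True, False]
    battery_levels = [5, 50, 100]
    return {
        (network_up, battery): choose_mode(network_up, battery)
        for network_up, battery in product(network_states, battery_levels)
    }


if __name__ == "__main__":
    for (network_up, battery), mode in run_scenarios().items():
        # Invariant: the device must always keep a local inference path.
        assert "local" in mode
        print(f"network_up={network_up!s:<5} battery={battery:>3}% -> {mode}")
```

Extending the lists of conditions, for example with temperature ranges or sensor dropouts, grows the matrix automatically, which makes it harder to overlook an awkward combination.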
