Data Processing and Analysis Methods: Unveiling the Power of Information

In today’s data-driven world, the ability to process and analyze vast amounts of information has become essential for businesses, researchers, and decision-makers across various industries. Data processing and analysis methods play a crucial role in extracting valuable insights from raw data, enabling us to make informed decisions, identify patterns, and drive innovation.

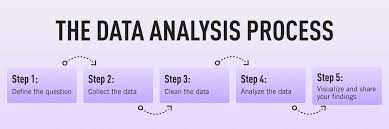

Data processing involves transforming raw data into a more usable format. It includes tasks such as data cleaning, integration, transformation, and aggregation. By applying these techniques, we can ensure that the data is accurate, consistent, and ready for further analysis.

One of the fundamental steps in data processing is data cleaning. This involves identifying and rectifying errors or inconsistencies in the dataset. It may include removing duplicate entries, dealing with missing values, or correcting formatting issues. By ensuring the quality of the data, we can minimize biases and inaccuracies that could affect subsequent analysis.
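Here is a rough illustration of these cleaning steps on a handful of records, using only Python's standard library. The field names (`name`, `email`, `age`) are assumptions made for the example. So is the choice to fill missing ages with the median, which is only one of several reasonable ways to handle gaps. In pandas, `drop_duplicates` and `fillna` cover similar ground.

```python
import statistics


def clean_records(records):
    """Fix formatting, drop duplicates, and fill missing ages with the median age."""
    cleaned = []
    seen = set()
    for rec in records:
        # Correct formatting issues: trim stray whitespace, lowercase the email.
        name = rec["name"].strip()
        email = rec["email"].strip().lower()
        key = (name.lower(), email)
        # Remove duplicate entries, compared after normalization.
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "email": email, "age": rec.get("age")})

    # Deal with missing values: impute the median of the known ages.
    known_ages = [r["age"] for r in cleaned if r["age"] is not None]
    median_age = statistics.median(known_ages) if known_ages else None
    for r in cleaned:
        if r["age"] is None:
            r["age"] = median_age
    return cleaned


# Hypothetical raw records with a formatting duplicate and a missing value.
raw = [
    {"name": " Ada Lovelace ", "email": "ADA@example.com", "age": 36},
    {"name": "Ada Lovelace", "email": "ada@example.com ", "age": 36},
    {"name": "Alan Turing", "email": "alan@example.com", "age": None},
    {"name": "Grace Hopper", "email": "grace@example.com", "age": 85},
]
for record in clean_records(raw):
    print(record)
```

The two Ada Lovelace rows collapse into one after whitespace and letter case are normalized. Alan Turing's missing age becomes 60.5, the median of the remaining ages.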

Once the data is cleaned and prepared, it is ready for analysis using various methods. Data analysis techniques can be broadly categorized into descriptive analytics, predictive analytics, and prescriptive analytics.

Descriptive analytics focuses on summarizing and visualizing the available data to gain a better understanding of past events or trends. It includes methods such as statistical measures (mean, median), visualizations (charts, graphs), and exploratory data analysis techniques. Descriptive analytics provides valuable insights into what has happened in the past but may not necessarily explain why it happened.
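This minimal example of descriptive statistics uses a short, hypothetical series of monthly sales. Python's built-in `statistics` module is enough here. Notice how a single unusually high month pulls the mean well above the median.

```python
import statistics

# Hypothetical monthly sales figures (units sold); one month is unusually high.
monthly_sales = [120, 135, 128, 150, 142, 310, 138]

summary = {
    "count": len(monthly_sales),
    "mean": statistics.mean(monthly_sales),
    "median": statistics.median(monthly_sales),
    "stdev": statistics.stdev(monthly_sales),  # sample standard deviation
    "min": min(monthly_sales),
    "max": max(monthly_sales),
}
for name, value in summary.items():
    print(f"{name:>6}: {value:.1f}")
```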

Predictive analytics takes us a step further by using historical data to make predictions about future outcomes. This method employs statistical modeling techniques like regression analysis or machine learning algorithms to identify patterns or relationships within the dataset. By leveraging these patterns, predictive analytics enables us to forecast future trends or behaviors, ideally alongside an estimate of how uncertain those forecasts are (for example, a prediction interval).
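To make the idea concrete, here is a sketch of the simplest predictive model: an ordinary least-squares straight line fitted to hypothetical sales by period and extended one period ahead. It is deliberately bare. It assumes a linear trend and does not compute the uncertainty around the forecast, which a real model would need to report.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical history: period number vs. units sold.
periods = [1, 2, 3, 4, 5, 6]
sales = [100, 108, 119, 125, 137, 146]

slope, intercept = fit_line(periods, sales)
forecast = slope * 7 + intercept  # extrapolate one period ahead
print(f"slope={slope:.2f}, intercept={intercept:.2f}, period 7 forecast={forecast:.1f}")
```

With these numbers, the fitted slope is about 9.23 units per period and the forecast for period 7 is about 154.8 units.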

Prescriptive analytics goes beyond descriptive and predictive analytics by recommending actions or strategies based on the analyzed data. It uses optimization algorithms, simulation models, and decision support systems to guide decision-making processes. Prescriptive analytics helps organizations make informed choices by considering multiple factors, constraints, and potential outcomes.
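Prescriptive analytics usually relies on dedicated optimization solvers, but the core idea fits in a few lines: evaluate each candidate action against a model of possible outcomes, then recommend the best one. The example below assumes a hypothetical retailer deciding how many units to order, with made-up costs, prices, and demand scenarios. It uses a brute-force search over order quantities in place of a real solver.

```python
# Hypothetical inputs: unit cost, selling price, and demand scenarios.
UNIT_COST = 4.0
PRICE = 10.0
DEMAND_SCENARIOS = [(80, 0.2), (100, 0.5), (120, 0.3)]  # (demand, probability)


def expected_profit(order_qty):
    """Expected profit of ordering order_qty units, averaged over the demand scenarios."""
    total = 0.0
    for demand, prob in DEMAND_SCENARIOS:
        sold = min(order_qty, demand)  # cannot sell more than was ordered or demanded
        total += prob * (PRICE * sold - UNIT_COST * order_qty)
    return total


# Constraint: order between 0 and 150 units, in steps of 10.
candidates = range(0, 151, 10)
best_qty = max(candidates, key=expected_profit)
print(f"recommended order: {best_qty} units, expected profit {expected_profit(best_qty):.2f}")
```

For these inputs the search recommends ordering 100 units, with an expected profit of 560.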

In recent years, advancements in technology have revolutionized data processing and analysis methods. Big data technologies, cloud computing, and artificial intelligence have enhanced our ability to handle massive datasets and extract meaningful insights efficiently. These technologies enable us to process data in real time, perform complex calculations rapidly, and uncover hidden patterns that were previously inaccessible.

Moreover, the integration of data from diverse sources has become more seamless with the development of application programming interfaces (APIs) and data integration platforms. This allows organizations to combine internal and external datasets for a more comprehensive analysis, leading to deeper insights and better decision-making.
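Once data from different sources is available, combining it often comes down to a join on a shared key. The sketch below assumes a hypothetical internal sales table and an external population lookup keyed by region code. It performs a left join, so every internal row is kept and a missing external value shows up as `None` rather than silently disappearing.

```python
# Hypothetical internal sales records and an external dataset keyed by region code.
internal_sales = [
    {"region": "N", "revenue": 1200},
    {"region": "S", "revenue": 950},
    {"region": "E", "revenue": 700},
]
external_population = {"N": 40000, "S": 25000}  # e.g. fetched from a public API


def left_join(rows, lookup, key, field):
    """Attach lookup[row[key]] to each row as `field`; None when the key is missing."""
    return [{**row, field: lookup.get(row[key])} for row in rows]


combined = left_join(internal_sales, external_population, "region", "population")
for row in combined:
    pop = row["population"]
    per_capita = row["revenue"] / pop if pop else None  # unmatched rows stay visible
    print(row["region"], per_capita)
```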

In conclusion, data processing and analysis methods are indispensable tools for unlocking the power of information. By employing these techniques effectively, we can transform raw data into valuable insights that drive innovation, optimize processes, and shape the future. As technology continues to advance rapidly, it is crucial for businesses and researchers alike to stay updated with the latest methodologies in order to harness the full potential of their data resources.

- Get to know the data – take time to understand the context of the data and its structure before attempting any analysis or processing.

- Choose appropriate tools – ensure you have the right tools for the job, such as statistical software packages or spreadsheets for data cleaning, manipulation and analysis.

- Keep it simple – don’t overcomplicate your methods; use straightforward techniques that are easy to explain and interpret.

- Validate your results – always check that your results are valid by running tests on a sample of the data and comparing them with known outcomes.

- Document your methods – make sure you record all steps taken in processing and analyzing data, so that others can understand what was done if they need to in the future.

Get to Know the Data: Unveiling the Key to Effective Analysis

When it comes to data processing and analysis, one crucial tip stands out among the rest: get to know your data. Taking the time to understand the context of the data and its structure before diving into any analysis or processing is essential for accurate and meaningful results.

Data can come in various formats, from spreadsheets and databases to unstructured text or sensor-generated streams. Each dataset has its unique characteristics, including variables, relationships, and potential biases. By familiarizing yourself with these aspects, you can make informed decisions about how best to handle and analyze the data.

Firstly, understanding the context of the data is vital. Ask yourself questions such as: What is the source of this data? Is it from a survey, transactional records, or experimental observations? Knowing where the data comes from helps you gauge its reliability and potential limitations. It allows you to assess whether it aligns with your research goals or business objectives.

Next, delve into the structure of the data. Identify key variables or attributes that are relevant to your analysis. Are there any missing values? Are there outliers that need special attention? Understanding these nuances will help you determine how much pre-processing is required before conducting any analysis.
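A quick structural profile answers several of these questions at once. This sketch uses hypothetical column names. It counts missing values per column and lists the Python types that appear in each one, which also exposes problems such as a numeric column that contains strings.

```python
from collections import Counter


def profile(rows):
    """For each column, count missing values and tally the types of present values."""
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]  # absent keys count as missing
        missing = sum(1 for v in values if v is None or v == "")
        types = Counter(type(v).__name__ for v in values if v not in (None, ""))
        report[col] = {"missing": missing, "types": dict(types)}
    return report


# Hypothetical rows: one missing age, one age stored as text, one blank city.
rows = [
    {"id": 1, "age": 34, "city": "Leeds"},
    {"id": 2, "age": None, "city": "York"},
    {"id": 3, "age": "41", "city": ""},
]
for col, info in profile(rows).items():
    print(col, info)
```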

Moreover, consider any inherent biases within the dataset. Bias can arise due to factors such as sampling methods or measurement errors. Recognizing these biases early on enables you to account for them during analysis and avoid drawing incorrect conclusions.
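If you have trusted reference figures such as census shares, one simple check for sampling bias is to compare each group's share of your sample with its share of the population. The group labels and shares below are hypothetical. This only detects imbalance on characteristics you can compare; measurement errors need other checks.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Sample share minus known population share, for each group."""
    counts = Counter(sample_groups)
    n = len(sample_groups)
    return {g: counts[g] / n - share for g, share in population_shares.items()}


# Hypothetical survey respondents by age band vs. assumed census shares.
respondents = ["18-34"] * 50 + ["35-54"] * 35 + ["55+"] * 15
census = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

gaps = representation_gaps(respondents, census)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")  # positive = over-represented in the sample
```

Here younger respondents are over-represented by about 20 percentage points and the oldest group is under-represented by the same amount.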

Taking time to explore and visualize your data can provide valuable insights into patterns, trends, and potential outliers. Visualization techniques like histograms, scatter plots, or box plots can help reveal distributional characteristics or relationships between variables. This exploration phase allows you to identify any anomalies that may require further investigation.
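A conventional (Tukey) box plot extends its whiskers at most 1.5 times the interquartile range (IQR) beyond the quartiles and draws anything further out as a separate point. The same rule can be applied directly in code. This minimal sketch uses the standard library and hypothetical monthly sales figures. Be aware that software packages compute quartiles in slightly different ways, so borderline points may be flagged differently from tool to tool.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR], the usual box-plot rule."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # default 'exclusive' quartile method
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


monthly_sales = [120, 135, 128, 150, 142, 310, 138]
print(iqr_outliers(monthly_sales))
```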

By getting acquainted with your data beforehand, you set a solid foundation for subsequent processing and analysis tasks. It also streamlines your workflow, because later steps can be tailored to the specific characteristics of the dataset, saving time and effort in the long run.

Additionally, understanding the context and structure of your data fosters transparency and reproducibility. It enables you to document your data processing and analysis steps accurately, making it easier for others to replicate or validate your findings.

In conclusion, taking the time to get to know your data is a vital step in data processing and analysis. By understanding the context, structure, and potential biases within the dataset, you can make informed decisions about how best to handle and analyze it. This approach enhances the accuracy and reliability of your results, leading to more meaningful insights that drive informed decision-making. So remember, before you embark on any analysis journey, take a moment to get acquainted with your data – it’s a small step that yields significant rewards.

Choose Appropriate Tools: Unlocking the Potential of Data Processing and Analysis

In the realm of data processing and analysis, having the right tools for the job can make all the difference. Whether you are a researcher, a business professional, or an aspiring data scientist, selecting appropriate tools is crucial for efficient and accurate data cleaning, manipulation, and analysis.

One of the first steps in any data-related task is data cleaning. This process involves identifying and rectifying errors or inconsistencies in the dataset to ensure its quality. To accomplish this effectively, it is essential to choose appropriate tools that offer features specifically designed for data cleaning.

Statistical software packages are widely used in various fields for their robust capabilities in handling complex datasets. These packages provide a range of functions and algorithms tailored to perform tasks such as identifying outliers, dealing with missing values, and detecting inconsistencies. Tools such as R, Python’s pandas library, or SPSS are popular choices among researchers and analysts because of their extensive data-cleaning functionality.

Spreadsheets also play a significant role in data processing and analysis. Programs like Microsoft Excel or Google Sheets offer user-friendly interfaces with built-in functionality that lets users manipulate and transform data without writing code. Spreadsheets provide features like sorting, filtering, conditional formatting, and formula calculations that facilitate tasks such as merging datasets or performing basic statistical analyses.

For more advanced analysis tasks that involve complex statistical modeling or machine learning algorithms, specialized software packages such as SAS, Stata, or MATLAB can be invaluable. These tools provide an extensive range of statistical methods along with powerful programming environments that enable users to conduct sophisticated analyses efficiently.

Additionally, with the advent of open-source software and libraries like scikit-learn or TensorFlow, aspiring data scientists have access to powerful machine learning frameworks that facilitate complex analyses without significant financial investment.

When choosing tools for data processing and analysis, it is essential to consider factors such as ease of use, scalability, compatibility with your data format, and the specific requirements of your project. It is also worth exploring online communities, forums, and tutorials dedicated to the tools you are considering to gain insights from experienced users and stay updated with the latest features and advancements.

In conclusion, selecting appropriate tools is a critical aspect of successful data processing and analysis. By ensuring you have the right software packages or spreadsheets at your disposal, tailored to your specific needs, you can streamline your workflow, enhance efficiency, and unlock the full potential of your data resources. So choose wisely, and let the power of appropriate tools propel you towards insightful discoveries and informed decision-making.

Keep it Simple: The Power of Straightforward Data Processing and Analysis Methods

When it comes to data processing and analysis, simplicity can be a powerful approach. While complex methods may seem impressive, using straightforward techniques that are easy to explain and interpret can lead to more effective results. Here’s why keeping it simple is a valuable tip in the world of data analysis.

Firstly, simplicity enhances understanding. By using straightforward methods, you ensure that your analysis is accessible to a wider audience. When presenting your findings or explaining your methodology, simplicity allows others to grasp the concepts more easily. This promotes collaboration and enables effective communication between team members or stakeholders who may not have an extensive background in data analysis.

Moreover, simple techniques often result in clearer insights. Complex methods can sometimes lead to convoluted or intricate outputs that are challenging to interpret. On the other hand, straightforward techniques generate results that are easier to understand and provide actionable insights. This clarity facilitates decision-making processes and allows for more efficient implementation of strategies based on the analysis.

Another advantage of simplicity is its efficiency. Overcomplicating methods can consume unnecessary time and resources. By opting for straightforward techniques, you streamline the data processing and analysis workflow, saving valuable time and effort. This efficiency is particularly crucial when working with large datasets or tight deadlines.

Furthermore, simple methods tend to be more robust and reliable. Complex algorithms may introduce additional layers of uncertainty or assumptions into the analysis process. In contrast, straightforward techniques often rely on well-established principles that have been thoroughly tested over time. This makes your results easier to verify and to trust.

Lastly, simplicity encourages reproducibility and transparency in research or business practices. When using straightforward techniques, it becomes easier for others to replicate your work or validate your findings independently. This fosters a culture of transparency within the field of data analysis and promotes scientific integrity.

In conclusion, keeping it simple when it comes to data processing and analysis methods can yield significant benefits. By using straightforward techniques, you enhance understanding, generate clearer insights, improve efficiency, ensure reliability, and promote reproducibility. So, the next time you embark on a data analysis project, remember the power of simplicity and choose methods that are easy to explain and interpret.

Validate your Results: Ensuring the Accuracy of Data Processing and Analysis

In the realm of data processing and analysis, it is crucial to validate the results obtained from our methodologies. Validating results involves running tests on a sample of the data and comparing them with known outcomes, ensuring that our findings are accurate and reliable.

Why is result validation important? Well, data processing and analysis involve complex algorithms, statistical models, and various assumptions. Errors or biases can easily creep into the process, leading to misleading or incorrect conclusions. By validating our results, we can gain confidence in the accuracy of our findings and make informed decisions based on reliable information.

One way to validate results is by using a sample of the data. Instead of analyzing the entire dataset, we select a representative subset that captures its essential characteristics. By applying our data processing and analysis methods to this sample, we can evaluate whether our results align with what we expect or know to be true.

Comparing these results with known outcomes provides a benchmark for accuracy assessment. For example, if we are predicting sales figures based on historical data, we can compare our predictions against actual sales figures for a specific period. This allows us to gauge how well our models perform in real-world scenarios.
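For the sales example, the comparison might look like the sketch below. Mean absolute error (MAE) reports the typical miss in units, and mean absolute percentage error (MAPE) reports it relative to the actual values. The figures and the 5% acceptance threshold are hypothetical; each project should set its own criterion.

```python
def mean_absolute_error(actual, predicted):
    """Average size of the miss, in the same units as the data."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mean_absolute_percentage_error(actual, predicted):
    """Average miss as a percentage of the actual value (assumes no zero actuals)."""
    return 100 * sum(abs(a - p) / abs(a) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical held-out period: actual vs. predicted sales.
actual = [200, 180, 220, 210]
predicted = [190, 185, 230, 200]

mae = mean_absolute_error(actual, predicted)
mape = mean_absolute_percentage_error(actual, predicted)
print(f"MAE={mae:.2f}, MAPE={mape:.2f}%")

MAX_ACCEPTABLE_MAPE = 5.0  # hypothetical validation criterion, set per project
print("within criterion" if mape <= MAX_ACCEPTABLE_MAPE else "investigate discrepancies")
```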

Validating results also helps identify potential issues or anomalies in the dataset. If there are discrepancies between expected outcomes and our analysis results, it prompts us to investigate further. It may uncover errors in data collection or preprocessing that need rectification before proceeding with full-scale analysis.

Moreover, result validation fosters transparency and reproducibility in research or business practices. By documenting the validation process and sharing it with others, we encourage peer review and collaboration. It allows others to replicate our analysis methods and verify the validity of our findings independently.

To ensure effective result validation:

- Define clear validation criteria: Establish specific metrics or benchmarks against which you will compare your results. This could be accuracy rates, error margins, or other relevant measures.

- Use diverse validation techniques: Employ a combination of quantitative and qualitative methods to validate your results. This could include statistical tests, visual inspections, or expert opinions.

- Be mindful of sample selection: Ensure that the sample used for validation is representative of the overall dataset. Random sampling or stratified sampling techniques can help achieve this.

- Document your validation process: Keep a record of the steps taken during result validation, including the chosen methods, assumptions made, and any limitations encountered. This documentation enhances transparency and facilitates future replication.
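Stratified sampling, one of the options listed above, can be sketched as drawing the same fraction from every group so that small groups are not missed by chance. The dataset, the `segment` field, and the 10% fraction below are hypothetical. The fixed random seed makes the validation sample reproducible, which also makes the process easier to document.

```python
import random
from collections import defaultdict


def stratified_sample(rows, stratum_key, fraction, seed=42):
    """Draw the same fraction (0 < fraction <= 1) from every stratum."""
    rng = random.Random(seed)  # fixed seed: the same call returns the same sample
    strata = defaultdict(list)
    for row in rows:
        strata[row[stratum_key]].append(row)
    sample = []
    for group in sorted(strata):
        members = strata[group]
        k = max(1, round(len(members) * fraction))  # keep at least one row per group
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical dataset: 80 "retail" and 20 "wholesale" customers.
rows = [{"id": i, "segment": "retail" if i < 80 else "wholesale"} for i in range(100)]
sample = stratified_sample(rows, "segment", fraction=0.1)
print(len(sample), sum(r["segment"] == "wholesale" for r in sample))
```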

In conclusion, validating results is an essential step in data processing and analysis. By running tests on a sample of the data and comparing them with known outcomes, we can ensure the accuracy and reliability of our findings. Result validation not only strengthens our confidence in the results but also promotes transparency and fosters collaboration within the scientific and business communities.

Document Your Methods: Ensuring Transparency and Reproducibility in Data Processing and Analysis

In the realm of data processing and analysis, documenting your methods is an essential practice that can greatly benefit both yourself and others who may need to understand or reproduce your work in the future. By recording all the steps taken during the process, you ensure transparency, facilitate collaboration, and promote reproducibility.

One of the key advantages of documenting your methods is transparency. By clearly documenting each step involved in processing and analyzing data, you provide a clear roadmap for others to follow. This transparency helps build trust in your work and allows others to verify your findings or replicate your results. It also enables easier collaboration with colleagues or researchers who may be working on similar projects or datasets.

Moreover, documenting your methods ensures that you have a comprehensive record of your own work. It serves as a valuable reference that you can revisit later if needed. It helps you recall specific details, parameters, or decisions made during the process, preventing any loss of information over time. This documentation becomes particularly useful when revisiting a project after some time or when sharing your work with others.

Furthermore, recording all steps taken during data processing and analysis promotes reproducibility. Reproducibility is crucial for scientific research as it allows others to validate or build upon previous findings. By providing detailed documentation of your methods, including software tools used, parameters set, and any modifications made to algorithms or models, you enable others to reproduce your work accurately. This not only enhances the credibility of your research but also contributes to the advancement of knowledge in the field.

To effectively document your methods, consider using a systematic approach. Start by maintaining a detailed logbook or lab notebook where you record each step chronologically as you progress through data processing and analysis tasks. Include information such as data sources and formats used, preprocessing techniques applied (e.g., cleaning, integration), software tools employed (with versions), and any custom scripts or code developed. Additionally, document any assumptions made, parameter values chosen, and reasoning behind specific decisions.
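Part of this record can be captured automatically. The sketch below uses hypothetical file names, steps, and parameters. It builds a log entry with a timestamp, a SHA-256 hash of the input data (so a later run can tell whether the data changed), the Python version and platform, and the decisions you describe by hand.

```python
import hashlib
import json
import platform
from datetime import datetime, timezone


def method_log_entry(data_bytes, source, steps, params):
    """Build one log entry recording the inputs, environment, and decisions of a run."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "data_source": source,
        "data_sha256": hashlib.sha256(data_bytes).hexdigest(),  # detects changed inputs
        "python_version": platform.python_version(),
        "platform": platform.platform(),
        "steps": steps,
        "parameters": params,
    }


entry = method_log_entry(
    data_bytes=b"id,age\n1,34\n2,\n",
    source="survey_2023.csv (hypothetical)",
    steps=["dropped duplicate rows", "imputed missing age with median"],
    params={"outlier_rule": "1.5 * IQR"},
)
print(json.dumps(entry, indent=2))
```

In practice you might append each entry to a log file, one JSON object per line, and record library versions with `importlib.metadata.version("pandas")` or similar.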

In addition to a logbook, consider creating supplementary documentation such as README files or method descriptions that provide a high-level overview of your approach. These summaries can help others quickly grasp the key steps involved in your data processing and analysis pipeline.

In conclusion, documenting your methods in data processing and analysis is crucial for ensuring transparency, facilitating collaboration, and promoting reproducibility. By recording all steps taken during the process, you create a valuable resource for yourself and others to understand and reproduce your work accurately. Embrace this practice as an integral part of your workflow to enhance the credibility of your research and contribute to the collective advancement of knowledge in the field.
